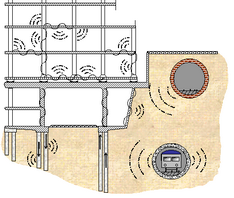

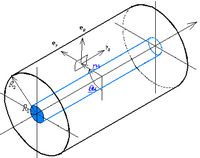

The PiP model is a fully three-dimensional predictive model whose computational accuracy has been validated to within 1 dB against other predictive models. For the published validations see publications, an extract from which is shown as the last slide in the PowerPoint presentation below.

Prediction Accuracy can't be better than 10 dB?

We believe that it is unreasonable to expect better than 10 dB prediction accuracy from any predictive model. For a justification see this PowerPoint presentation. In this presentation certain parameters of the PiP model have been varied by a small amount, consistent with uncertainties in measured data. The predicted vibration levels vary significantly, often by more than 10 dB. This error cannot be forecast.

In the presentation, changes are made to a set of arbitrary initial parameters (Case 1) as follows:

> Case 2. - soil parameters (compressive and shear wave velocities and density) changed by 15% - changes vibration prediction by up to 6 dB

> Case 3. - bending stiffness of track slab increased substantially - increases vibration by up to 5 dB

> Case 4. - mass of slab increased and natural frequency of slab decreased - shows performance of Floating Slab Track

> Case 5. - measurement position changed by 5 m horizontally - changes vibration by more than 5 dB

So we have shown by example that if a prediction error of more than 5 dB results from even small uncertainties in soil parameters and measurement position, it cannot be sensible in general to rely on prediction models for an accumulated accuracy better than 10 dB. Of course there will be circumstances where data is known accurately and as a result prediction accuracy can be improved, but it will always be prudent to run the simulation a number of times with variation of the parameters (within estimated bounds of uncertainty) to assess the actual prediction error. Bear in mind also that features such as soil layering, ground water, piled foundations, voids adjacent to the tunnel etc. can only be included in very sophisticated models. Such models are very difficult to validate, and their accuracy depends critically on the accuracy of the input data. Sophisticated models also require a great deal more input data than simpler models.
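The idea of running the simulation repeatedly with the parameters varied within their bounds of uncertainty can be sketched as follows. This is only an illustration: `toy_level_db` is a made-up placeholder, not the PiP model and not physically meaningful, and the nominal values and uncertainty bounds are assumptions chosen for the example. In practice the placeholder would be replaced by a call to whichever model is being assessed.

```python
import math
import random


def toy_level_db(shear_speed, density, distance):
    """Placeholder model: NOT PiP and not physically meaningful.

    Returns a level in dB for the given inputs; swap in a real model call here.
    """
    amplitude = 1.0 / (density * shear_speed**2 * distance)
    return 20.0 * math.log10(amplitude / 1e-9)


def spread_db(model, nominal, rel_uncertainty, runs=1000, seed=0):
    """Run `model` repeatedly, drawing each parameter uniformly within its bounds.

    nominal: dict of parameter name -> nominal value
    rel_uncertainty: dict of parameter name -> fractional bound (0.15 means +/-15%)
    Returns (lowest, highest) predicted level in dB over all runs.
    """
    rng = random.Random(seed)
    levels = []
    for _ in range(runs):
        sample = {
            name: value * (1.0 + rng.uniform(-rel_uncertainty[name], rel_uncertainty[name]))
            for name, value in nominal.items()
        }
        levels.append(model(**sample))
    return min(levels), max(levels)


# Illustrative nominal values and assumed uncertainty bounds (hypothetical).
nominal = {"shear_speed": 200.0, "density": 2000.0, "distance": 20.0}
bounds = {"shear_speed": 0.15, "density": 0.15, "distance": 0.25}

low, high = spread_db(toy_level_db, nominal, bounds)
print(f"predicted level ranges over {high - low:.1f} dB")
```

The range between the lowest and highest prediction gives a rough indication of the prediction error attributable to input uncertainty alone. Uniform sampling is used here for simplicity; other distributions may suit particular data better.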

While we are questioning the use of numerical models to predict vibration with great accuracy, we believe that modelling is very useful for assessing changes in vibration levels in response to small changes in model parameters. Models such as the PiP model are therefore useful for determining the performance of vibration countermeasures and for estimating insertion gain. The insertion gain is not nearly so sensitive to uncertainty, for example in the exact determination of soil parameters.
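A minimal sketch of why insertion gain can be more robust is given below. It assumes the usual definition of insertion gain as the ratio, in dB, of the response with a countermeasure to the response without it. The function name and the amplitudes are hypothetical and are not model output. To the extent that an error scales both predictions by the same factor, it cancels in the ratio.

```python
import math


def insertion_gain_db(amplitude_with, amplitude_without):
    """Insertion gain in dB; negative values mean the countermeasure reduces vibration."""
    return 20.0 * math.log10(amplitude_with / amplitude_without)


# Illustrative amplitudes only (arbitrary units), not model output.
without_countermeasure = 4.0
with_countermeasure = 1.0
print(insertion_gain_db(with_countermeasure, without_countermeasure))

# A common scale error applied to both predictions cancels in the ratio.
scale_error = 2.0
print(insertion_gain_db(with_countermeasure * scale_error,
                        without_countermeasure * scale_error))
```

Both calls give about -12 dB. Real uncertainties will not always affect the two predictions equally, so the cancellation is only partial in practice.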

Accounting for Uncertainty

The above highlights one of many sources of uncertainty in the process of using numerical models for the prediction of vibration from railways. Here is an attempt to distinguish between the errors that can arise at the various stages of modelling:

1. - model assumptions (scientific issues): All modelling makes assumptions about the behaviour of materials under dynamic loading. The most important of these is linearity. Certain elements in the vibration path behave in a non-linear manner, e.g. rail pads and other rubber isolators, ballast and soil in large-amplitude motion. Saturated soils also change their behaviour in the presence of vibration. Most models do not take into account effects such as these, preferring to use the well-established and well-understood equations for linear elasticity. As a result the predictions they make will be approximate.

2. - model correctness and convergence (coding issues): All numerical models take sets of coupled differential equations and solve them either in the frequency domain or the time domain. Many techniques involve making numerical transformations between these domains, and also between the space and wave-number domains. All models make a discrete representation of what is in fact a continuum. Models must also set boundaries on space, time and frequency (computers cannot count to infinity). In order to test the validity of these boundaries, it is necessary to carry out convergence tests, for example by reducing the mesh size or the time-stepping interval, or increasing the length of the FFT, until the predicted results do not change.

Numerical models are also subject to error in the same way as spelling mistakes can creep into any document. A stray minus sign or a mistyped variable may not cause a computer programme to fail, and it will produce seemingly correct results.

It is necessary to benchmark numerical models against each other in as many circumstances as possible so as to test their limits of applicability. If these limits are not known then it will not be possible to know when to expect the results to be in error.

3. - shoe-horning (fitting the model to the real world = user-oriented assessment): The model cannot account for everything. For instance, if the model includes soil layering then the gradual variation of soil properties with depth needs to be fitted into this discrete-layer structure. If soil layers in the model are assumed to be horizontal then some assumption has to be made to deal with inclined layers. And if the tunnel passes from one soil layer to another then how should the model best be used?

This all requires engineering judgement and different users of the software will make different assumptions, producing different predictions.

4. - data gathering: All models require data. Data from soils, foundations and buildings is difficult to obtain, especially where these data vary with depth or where geometrical data is simply not known. The condition of infrastructure can be difficult to assess as there may be cracks or voids that are not visible to the eye. If the data that goes into a numerical model is subject to uncertainty then the results that the model produces will also be uncertain.

5. - excitation: The model will make assumptions about the forcing function - i.e. certain types of trains running over certain types of track at certain speeds with certain roughness of rail and wheels. The predicted vibration will depend critically on this input. It must be realistic and it must cover an appropriate range of possibilities.

6. - measurement point: The observer may be a person sitting in their living room having a cup of tea, or a microphone in a recording studio, or the target of an ion-beam diagnostic instrument. These all pick up noise and vibration in different ways and from different points in the room. Most models are not capable of assessing variation of vibration from place to place in such detail, so an allowance must be made for this kind of uncertainty. If a model does include variation in measurement point then an appropriate range of measurement points should be covered to give an indication of uncertainty and variability.

7. - validation (evaluation): A model must be objectively validated. Given the large number of sources of error listed here it is important to test the model at a number of different sites with different geologies, geometries and trackforms. All data collected must be included in the validation process, including data where agreement is poor. In this way a reasonable estimate of the statistical uncertainty can be gathered. It is unfortunate that only instances where agreement is good are generally published. This gives the impression that modelling accuracy is better than it is. Even so, the published literature is full of comparisons between prediction and measurement where agreement covers a range of +/-10 dB.
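One way to gather the statistical estimate described under validation is sketched below, assuming that each validation case provides one predicted and one measured level in dB. The function name and the choice of summary statistics are assumptions made for illustration, and the numbers are placeholders, not measured or predicted data. Every case goes into the summary, whether agreement is good or poor.

```python
import statistics


def validation_summary(predicted_db, measured_db):
    """Summarise prediction-minus-measurement differences (dB) over all cases."""
    if len(predicted_db) != len(measured_db):
        raise ValueError("need one measurement per prediction")
    errors = [p - m for p, m in zip(predicted_db, measured_db)]
    return {
        "mean_error_db": statistics.mean(errors),      # systematic bias
        "std_error_db": statistics.stdev(errors),      # scatter about the bias
        "max_abs_error_db": max(abs(e) for e in errors),
    }


# Placeholder numbers for illustration only; not measured or predicted data.
predicted = [62.0, 55.0, 70.0, 48.0]
measured = [58.0, 61.0, 67.0, 53.0]
print(validation_summary(predicted, measured))
```

Reporting the scatter and the worst case alongside the mean avoids the impression of accuracy that a few well-matched examples can give.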

How do other disciplines deal with uncertainty?

The procedure outlined above is well-established in other disciplines. A good example is set in the Proceedings of the International Workshop on Quality Assurance of Microscale Meteorological Models. Modelling fluid flows in and around cities (this is what microscale meteorology is; the models are used for environmental impact studies of pollution from waste incinerators etc.) requires the use of complex and validated numerical models. Here is a quote from Rex Britter, one of the editors of these proceedings:

> Models of whatever type are only of use if their quality (fitness for purpose) has been quantified, documented and communicated to potential users.

> It may not be appropriate to talk of a valid model, but only of a model that has agreed upon regions of applicability and quantified levels of performance (accuracy) when tested upon certain specific and appropriate data sets. Scientific and statistical evaluations can enhance our confidence in models developed for environmental problems. Quality determination requires at least:

Our feeling is that we all fall very far short of the standards set here by the microscale meteorologists. As a community working in the area of vibration from railways, we do not have any agreed best-practice guidelines and we have no agreed strategy by which predictive models can be assessed for quality assurance.

The PiP model has been developed in collaboration with, and with support from, the research team at K.U.Leuven led by Prof Geert Degrande. They have allowed us generous access to their FEM-BEM models in order to produce independent and robust model intercomparison for PiP.

Acknowledgement is also owed to our partners in CONVURT.

![[Univ of Cambridge]](http://www.eng.cam.ac.uk/images/house_style/uniban-s.gif)

![[Dept of Engineering]](http://www.eng.cam.ac.uk/images/house_style/engban-s.gif)

![[Univ of Nottingham]](http://www.nottingham.ac.uk/~evzwwwin/images/vis-logo.jpg)